TASC Notes - Part 5

These are the notes summarising what was covered in The Art and Science of CAP part 5, one episode in a mini series with Daniel Hutzel to explore the philosophy, the background, the technology history and layers that support and inform the SAP Cloud Application Programming Model.

For all resources related to this series, see the post The Art and Science of CAP.

This episode started with a review of the previous episode (part 4), based on the notes for that episode.

New cds REPL options

At around 17:30 Daniel introduces some of his very recent development work, adding features to the cds REPL, which we'll hopefully see in the next CAP release (update: we do indeed - see the cds repl enhancements of the December 2024 release notes!):

-r | --run

Runs a cds server from a given CAP project folder, or module name.

You can then access the entities and services of the running server.

It's the same as using the repl's builtin .run command.

-u | --use

Loads the given cds feature into the repl's global context. For example,

if you specify ql it makes the cds.ql module's methods available.

It's the same as doing {ref,val,xpr,...} = cds.ql within the repl.

As the description for --run says, it's a shortcut for starting the REPL and then issuing .run <project>.

The --use feature is a little more intriguing, in that it will import, into the REPL's global environment, the values exported by the specified feature. Daniel shows an example for cds.ql which -- at least in his bleeding edge developer version -- includes new, simple utility functions ref, val, xpr and expand that make it easier to manually construct CQN representations of queries (which can otherwise be quite tricky with all the bracket notation) when working in the REPL.
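To get a feel for how such helpers might work, here's a minimal sketch in plain JavaScript using tagged template functions. The names refSketch and valSketch are made up for illustration; this is an assumption about the general technique, not the actual cds.ql implementation, and it only covers the two simplest cases.

```javascript
// Hypothetical stand-ins for the ref and val helpers, NOT the cds.ql code.
// A tagged template function receives the literal's string parts plus any
// interpolated values; String.raw stitches them back into a single string.
const refSketch = (strings, ...values) => ({
  ref: String.raw(strings, ...values).split('.') // one path step per segment
})

const valSketch = (strings, ...values) => ({
  val: String.raw(strings, ...values) // the text is kept as one literal value
})

const r = refSketch`foo.bar` // { ref: [ 'foo', 'bar' ] }
const v = valSketch`foo.bar` // { val: 'foo.bar' }
```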

Here are some examples:

ref`foo.bar` // -> { ref: [ 'foo', 'bar' ] }

val`foo.bar` // -> { val: 'foo.bar' }

expand`foo.bar` // -> { ref: [ 'foo', 'bar' ], expand: [ '*' ] }

and even:

expand (`foo.bar`, where`x<11`, orderBy `car`, [ ref`a`, ref`b`, xpr`a+b`])

which emits:

{

ref: [ 'foo.bar' ],

where: [ { ref: [ 'x' ] }, '<', { val: 11 } ],

orderBy: [ { ref: [ 'car' ] } ],

expand: [

{ ref: [ 'a' ] },

{ ref: [ 'b' ] },

{

xpr: [ { ref: [ 'a' ] }, '+', { ref: [ 'b' ] } ]

}

]

}

Infix filters

While building up this example, Daniel remarks that:

"CAP CDL has infix filters and so has CQN".

I wanted to take a moment to think about this, and dig into Capire, for two reasons:

- it was important to understand the meaning and implication of the term "infix filter" and to recognise such filters at the drop of a hat

- these two DSLs (CDL and CQN) are, at least in my mind, orthogonal to one another in a couple of dimensions:

| CDS DSLs | Human | Machine |

|---|---|---|

| Schema | CDL | CSN |

| Queries | CQL | CQN |

... and I was curious to understand how an infix filter would be meaningful across the two differing pairs of DSLs.

So I took the DSL pair for queries first, and found the With Infix Filters section (in the CQL chapter, which makes sense as it's essentially a syntactical construct for humans, not machines), which has this example:

SELECT books[genre='Mystery'].title from Authors

WHERE name='Agatha Christie'

Given that the term "infix" implies "between" (as in infix notation), the [genre='Mystery'] part is the infix filter in this example¹.

But what about infix filters in CDL? Well, you can add a filter to an association, as shown in the Association-like calculated elements section of the CDL chapter with this example:

entity Employees {

addresses : Association to many Addresses;

homeAddress = addresses [1: kind='home'];

}

And still in a CDL context, but moving from defining to publishing, there's another example in the Publish Associations with Filter section:

entity P_Authors as projection on Authors {

*,

books[stock > 0] as availableBooks

};

Diving into query objects

To tie this back to what Daniel was showing in the REPL, we can resolve the CQL example above (looking for an author's books in the "Mystery" genre) into its canonical object representation in CQN, in the REPL, using an explicit call to cds.parse.cql like this:

> cds.parse.cql(`

... SELECT books[genre='Mystery'].title

... from Authors

... where name='Agatha Christie'

... `)

{

SELECT: {

from: { ref: [ 'Authors' ] },

columns: [

{

ref: [

{

id: 'books',

where: [ { ref: [ 'genre' ] }, '=', { val: 'Mystery' } ]

},

'title'

]

}

],

where: [ { ref: [ 'name' ] }, '=', { val: 'Agatha Christie' } ]

}

}

Alternatively, and steering us back on track, we can use the literate-style "query building" functions similar to what Daniel did in the subsequent SELECT call (around 21:43), like this:

> SELECT `books[genre='Mystery'].title` .from `Authors` .where `name='Agatha Christie'`

Query {

SELECT: {

from: { ref: [ 'Authors' ] },

columns: [

{

ref: [

{

id: 'books',

where: [ { ref: [ 'genre' ] }, '=', { val: 'Mystery' } ]

},

'title'

]

}

],

where: [ { ref: [ 'name' ] }, '=', { val: 'Agatha Christie' } ]

}

}

Trying out an infix filter

To complete the circle and also experience for myself how it feels to instantiate queries and run them in the REPL, I took this same CQL infix example:

SELECT books[genre='Mystery'].title from Authors

WHERE name='Agatha Christie'

... and constructed an equivalent query on the Northbreeze dataset². Doing that helped me think about what is going on in this query, which is the traversal of a relationship (Authors -> books) with a restriction on the destination entity (via an infix filter) as well as on the source entity.
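That structure is easy to pick out programmatically once the query is in CQN form. As a minimal sketch (infixFilters is a hypothetical helper, not part of the CAP API), this walks the column references of the Authors query shown earlier and collects any path step that carries a where clause:

```javascript
// Hypothetical helper: collect the infix filters found in a CQN SELECT's columns.
function infixFilters(cqn) {
  const found = []
  for (const column of cqn.SELECT.columns ?? []) {
    for (const step of column.ref ?? []) {
      // A plain path step is a string; a filtered step is an object { id, where }
      if (typeof step === 'object' && step.where) {
        found.push({ association: step.id, where: step.where })
      }
    }
  }
  return found
}

// The CQN for the Authors query, as emitted by cds.parse.cql earlier
const authorsQuery = {
  SELECT: {
    from: { ref: ['Authors'] },
    columns: [
      { ref: [{ id: 'books', where: [{ ref: ['genre'] }, '=', { val: 'Mystery' }] }, 'title'] }
    ],
    where: [{ ref: ['name'] }, '=', { val: 'Agatha Christie' }]
  }
}

const filters = infixFilters(authorsQuery)
// one entry: the filter on the destination (books), not the where on the source
```

Only the restriction on the destination entity is reported; the restriction on the source entity lives in the separate top-level where. The same helper works unchanged on a Northbreeze variant of the query.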

Here's that equivalent query:

SELECT Products[Discontinued=false].ProductName from northbreeze.Suppliers

WHERE CompanyName='Tokyo Traders'

And here it is being constructed:

> q1 = cds.parse.cql(`

... SELECT Products[Discontinued=false].ProductName

... from northbreeze.Suppliers

... WHERE CompanyName='Tokyo Traders'

... `)

{

SELECT: {

from: { ref: [ 'northbreeze.Suppliers' ] },

columns: [

{

ref: [

{

id: 'Products',

where: [ { ref: [ 'Discontinued' ] }, '=', { val: false } ]

},

'ProductName'

]

}

],

where: [ { ref: [ 'CompanyName' ] }, '=', { val: 'Tokyo Traders' } ]

}

}

Executing queries

Note that what's produced here is a query object (in CQN) but not something that is immediately await-able (see the notes from part 4).
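What makes an object await-able in JavaScript is a then method. Here's a minimal sketch of that idea, assuming (this is not confirmed in the episode) that a wrapper object can be await-able for exactly that reason. QuerySketch and fakeRun are hypothetical names, and the rows returned are canned stand-ins, not real data.

```javascript
// Hypothetical wrapper: carries the CQN plus a function able to execute it
class QuerySketch {
  constructor(cqn, execute) {
    this.SELECT = cqn.SELECT
    // non-enumerable, so the object still looks like plain CQN when inspected
    Object.defineProperty(this, 'execute', { value: execute })
  }
  // `await` (and Promise.resolve) call then() on any object that has one
  then(resolve, reject) {
    return this.execute(this).then(resolve, reject)
  }
}

// Stand-in for a database service: returns canned rows instead of querying
const fakeRun = async cqn => [{ queried: cqn.SELECT.from.ref[0] }]

const plain = { SELECT: { from: { ref: ['northbreeze.Suppliers'] } } }
const wrapped = new QuerySketch(plain, fakeRun)

// Promise.all treats its inputs the same way await does
const results = Promise.all([plain, wrapped])
// resolves to [ plain, [ { queried: 'northbreeze.Suppliers' } ] ]
```

A plain CQN object has no then method, so awaiting it just gives back the object itself, unexecuted.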

This is in contrast to the query object that is produced from the literate query building approach which looks like this, with an assignment to q2³:

q2 = SELECT

.columns(`Products[Discontinued=false].ProductName`)

.from `northbreeze.Suppliers`

.where `CompanyName='Tokyo Traders'`

This produces the same CQN object ... but wrapped within a Query object:

Query {

SELECT: {

columns: [

{

ref: [

{

id: 'Products',

where: [ { ref: [ 'Discontinued' ] }, '=', { val: false } ]

},

'ProductName'

]

}

],

from: { ref: [ 'northbreeze.Suppliers' ] },

where: [ { ref: [ 'CompanyName' ] }, '=', { val: 'Tokyo Traders' } ]

}

}

To execute the first query object, we must pass it to cds.run:

> await cds.run(q1)

[

{ Products_ProductName: 'Ikura' },

{ Products_ProductName: 'Longlife Tofu' }

]

but the Query-wrapped CQN object is directly await-able:

> await q2

[

{ Products_ProductName: 'Ikura' },

{ Products_ProductName: 'Longlife Tofu' }

]

The OData V4 equivalent

If you're curious to understand how this query might be represented in OData, and see the data for yourself, here's the corresponding query operation, shown here⁴ with extra whitespace to make it easier to read:

https:

/odata/v4/northbreeze

/Suppliers

?$expand=Products(

$select=ProductName;

$filter=Discontinued eq false

)

&$filter=CompanyName eq 'Tokyo Traders'

This query produces this entityset result:

{

"@odata.context": "$metadata#Suppliers(Products(ProductName,ProductID))",

"value": [

{

"SupplierID": 4,

"CompanyName": "Tokyo Traders",

"ContactName": "Yoshi Nagase",

"ContactTitle": "Marketing Manager",

"Address": "9-8 Sekimai Musashino-shi",

"City": "Tokyo",

"Region": "NULL",

"PostalCode": "100",

"Country": "Japan",

"Phone": "(03) 3555-5011",

"Fax": "NULL",

"HomePage": "NULL",

"Products": [

{

"ProductID": 10,

"ProductName": "Ikura"

},

{

"ProductID": 74,

"ProductName": "Longlife Tofu"

}

]

}

]

}

CQL > SQL

Wrapping up this section, Daniel highlights a couple of the major enhancements to SQL that are in CQL:

- associations and path expressions

- nested projections

And this caused me to stare a little bit more at the examples, such as this one:

SELECT.from`foo.bar[where x<11 order by car]{ a, b, a + b}`

This underlines the abstract nature of CQL: have you noticed that we have been constructing CQN objects -- query objects -- with no relation (no pun intended) to real metadata? The query objects produced make little sense in that there is no foo.bar, there is no x or car⁵, and so on.

But that is irrelevant when working at the abstract level of the CQL/CQN DSL pairing, because it's only when the query object is sent to a database service that it gets translated into something "real". And that is something quite beautiful.
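To illustrate that "translation at the last moment" idea, here's a deliberately tiny sketch that turns a simple CQN SELECT into a SQL string. It is a toy written for these notes, not how CAP's database services work: toSql and exprToSql are hypothetical names, only plain column refs, literal values and operator strings are handled, and the dot-to-underscore table naming is an assumption made for the example.

```javascript
// Toy expression renderer: tokens are operator strings, { ref } or { val }
function exprToSql(tokens) {
  return tokens.map(t => {
    if (typeof t === 'string') return t                 // operator, e.g. '<'
    if (t.ref) return t.ref.join('.')                   // column reference
    if (typeof t.val === 'string') return `'${t.val.replace(/'/g, "''")}'`
    return String(t.val)                                // number or boolean
  }).join(' ')
}

// Toy SELECT renderer: no joins, no infix filters, no nested projections
function toSql({ SELECT }) {
  const columns = SELECT.columns
    ? SELECT.columns.map(c => c.ref.join('.')).join(', ')
    : '*'
  const table = SELECT.from.ref.join('.').replace(/\./g, '_') // assumed naming
  let sql = `SELECT ${columns} FROM ${table}`
  if (SELECT.where) sql += ` WHERE ${exprToSql(SELECT.where)}`
  return sql
}

// A query object over metadata that does not exist anywhere
const abstractQuery = {
  SELECT: {
    from: { ref: ['foo.bar'] },
    columns: [{ ref: ['a'] }, { ref: ['b'] }],
    where: [{ ref: ['x'] }, '<', { val: 11 }]
  }
}

const sql = toSql(abstractQuery) // 'SELECT a, b FROM foo_bar WHERE x < 11'
```

The query object stays abstract and portable until a function like this (or, in CAP, a database service that does vastly more) gives it a concrete form.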

Composite services and mashups

At around 24:15 Daniel picks up a thread from a previous episode in this series, and explains more about how mixins, from Aspect Oriented Programming, can be used to flatten hierarchies and bring independent services together to form a composite application.

How? By mashing up the services using the aspect-based power of CDL.

In the cloud-cap-samples repo, there are various CAP services in separate directories, such as bookshop and reviews.

There's also bookstore which is a composite of those two services. To have these services know about each other, the NPM workspace concept is used, in that the root package.json file includes:

"workspaces": [

"./bookshop",

"./bookstore",

"./common",

"./data-viewer",

"./fiori",

"./hello",

"./media",

"./orders",

"./loggers",

"./reviews"

]

Note that @capire/bookshop and @capire/reviews, both available via this workspace concept in their respective directories, are declared as dependencies in bookstore's package.json file:

{

"name": "@capire/bookstore",

"version": "1.0.0",

"dependencies": {

"@capire/bookshop": "*",

"@capire/reviews": "*",

"...": "*"

},

"...": "..."

}

With that established, and via bookstore's index.cds file:

namespace sap.capire.bookshop; //> important for reflection

using from './srv/mashup';

we get to srv/mashup.cds which is enlightening. Here's one section of it:

using { sap.capire.bookshop.Books } from '@capire/bookshop';

using { ReviewsService.Reviews } from '@capire/reviews';

extend Books with {

reviews : Composition of many Reviews on reviews.subject = $self.ID;

rating : type of Reviews:rating; // average rating

numberOfReviews : Integer @title : '{i18n>NumberOfReviews}';

}

This is the heart of the mashup, and very similar to the one illustrated in Flattening the hierarchy with mixins, where the Books entity from @capire/bookshop is mashed up with the Reviews entity from @capire/reviews. Note that both these services are independent, neither of them knows about the other, and neither is "owned" by bookstore.

But in the mashup context that doesn't matter, and the aspect oriented modelling is what makes this both possible, and indeed simple. After a formal introduction (using...) they are wired together in a single line of code declaring the Composition.

Like a dating service for domain entities.

Local and remote services

At around 28:21, given this mashup, Daniel walks us through how these services interact with each other specifically at design time with cds watch⁶.

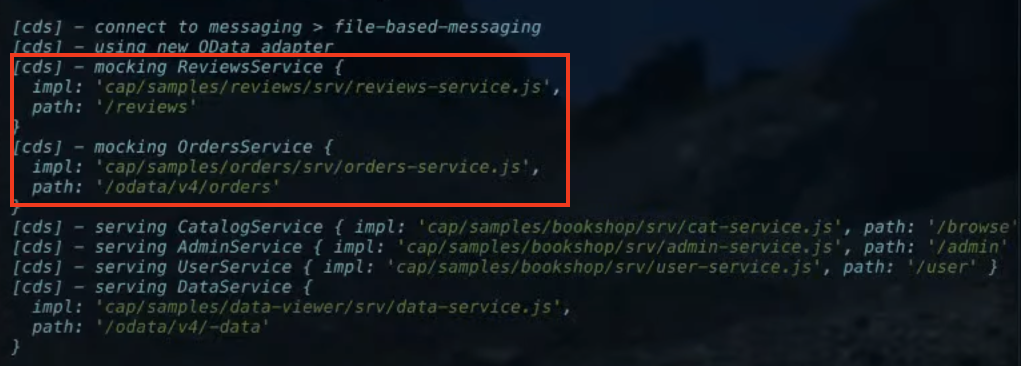

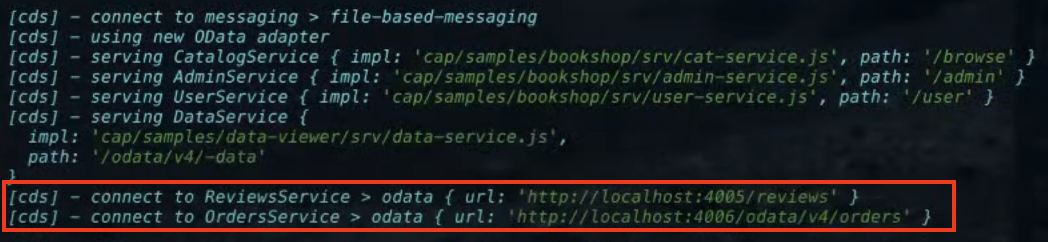

This is a great reminder of what we covered in the previous part, which you can find summarised in the Tight loops, design time affordances and service integration section of the previous episode's notes. Here, ReviewsService and OrdersService are mocked at first because that CAP server is the only one running (locally, on 4004):

but when these two services are started locally too, in separate CAP servers on 4005 and 4006 respectively, a local connection to both is possible, and made:

Messaging and "grow as you go"

Once the services in this "bookstore" constellation were all up and running, and Daniel had resolved the authorisation issue with a small but precise golden hammer, specifically and temporarily commenting out this reject, in restrict.js within the libx/_runtime/common/generic/auth/ section of @sap/cds (don't do this at home, kids!):

const restrictedCount = await _getRestrictedCount(req, this.model, resolvedApplicables)

if (restrictedCount < unrestrictedCount) {

reject(req, getRejectReason(req, '@restrict', definition, restrictedCount, unrestrictedCount))

}

... it was time to become familiar with inter-service messaging. Which just works, out of the box, with zero configuration, in CAP's classic grow as you go approach to making the lives of developers, especially in design time, as easy as possible.

When Daniel entered a review on the Vue.js based frontend served by the CAP server serving ReviewsService on 4005, we saw in the logs of that CAP server something like this:

< emitting: reviewed { subject: '201', count: 2, rating: 4.5 }

and in the logs of the CAP server on 4004, we saw a corresponding message something like this:

> received: reviewed { subject: '201', count: 2, rating: 4.5 }

This is such a great example of "the simplest thing that could possibly work", and indeed it works very well! At design time, you get messaging out of the box powered by a file-based mechanism, as we can see from the logs:

[cds] - connect to messaging > file-based-messaging

and that file (that Daniel and I were trying to remember the name of) is .cds-msg-box. Like .cds-services.json, it is a design time only artifact:

- hidden (starts with a .)

- in the developer user's home directory (~/)
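To make the idea of a file acting as a message box concrete, here's a toy model in plain Node.js. It follows the "event name, space, JSON payload" shape of the records that appear in this file, but everything else (the function names emit and consume, the temporary file location, the read-then-truncate logic) is an assumption for illustration, not CAP's implementation.

```javascript
const fs = require('node:fs')
const os = require('node:os')
const path = require('node:path')

// Hypothetical stand-in for ~/.cds-msg-box, kept in a fresh temp directory
const box = path.join(fs.mkdtempSync(path.join(os.tmpdir(), 'msg-box-')), 'msg-box')
fs.writeFileSync(box, '')

// Emitter side: append one line per event
function emit(event, data, headers = {}) {
  fs.appendFileSync(box, `${event} ${JSON.stringify({ data, headers })}\n`)
}

// Receiver side: read whatever is pending, empty the file, return the messages
function consume() {
  const lines = fs.readFileSync(box, 'utf8').split('\n').filter(Boolean)
  fs.writeFileSync(box, '')
  return lines.map(line => {
    const i = line.indexOf(' ') // the event name ends at the first space
    return { event: line.slice(0, i), ...JSON.parse(line.slice(i + 1)) }
  })
}

emit('ReviewsService.reviewed', { subject: '207', count: 2, rating: 2 })
const pending = fs.readFileSync(box, 'utf8') // one record waiting for a receiver
const received = consume()                   // the receiver picks it up ...
const after = fs.readFileSync(box, 'utf8')   // ... and the file is empty again
```

While no receiver runs, records simply accumulate in the file; once one consumes them, the file returns to its empty state.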

When emitting and receiving is working as planned, this file is normally empty. To see what gets written to it, you have to stop the receiver (so that the messages are not consumed) and take a look. Stopping the "bookstore" CAP server on 4004, and then creating another review, causes, as expected, another record to be written to the log of the ReviewsService CAP server on 4005, like this:

< emitting: reviewed { subject: '207', count: 2, rating: 2 }

Inside the ~/.cds-msg-box is an event record that looks like this:

ReviewsService.reviewed {"data":{"subject":"207","count":2,"rating":2},"headers":{"x-correlation-id":"9ad1c255-cde7-45f3-9943-7ff702691de4"}}

And it almost goes without saying that as soon as the CAP server on 4004 is restarted (in watch mode of course) that record sitting in ~/.cds-msg-box is consumed:

> received: reviewed { subject: '207', count: 2, rating: 2 }

Exploring agnostic event processing in the REPL

At around 42:04 Daniel doubles down on what we've just experienced in this inter-service event coordination by diving once again into the cds REPL to (ultimately) show how similar requests and events are in CAP.

This is reflected in the srv.send(request) and srv.emit(event) methods, but also in how Daniel described his choice of parameter names⁷ in handlers in this area, which I see like this:

eve The eventing "superclass" parent

|

+-- req a request (synchronous request/response context)

|

+-- msg a message (asynchronous event message context)

At the REPL prompt, he creates a couple of cds.Service instances, which are "just event dispatchers", and adorns each with a handler. Concisely, first here is b:

> (b = new cds.Service).before('*', eve => console.log(eve.event, eve.data))

Service {

_handlers: {

_initial: [],

before: [ EventHandler { before: '*', handler: [Function (anonymous)] } ],

on: [],

after: [],

_error: []

},

name: 'Service',

options: {}

}

The handler's function signature uses eve as the parameter name, to underline the point above, in that it doesn't matter whether a request (req) or a message (msg) is (sent and) received, what matters is that it's "just an event (with a small 'e') handler".

Next, a⁸:

> (a = new cds.Service).on('some event', msg => b.send('hey see', msg))

Service {

_handlers: {

_initial: [],

before: [],

on: [

EventHandler {

on: 'some event',

handler: [Function (anonymous)]

}

],

after: [],

_error: []

},

name: 'Service',

options: {}

}

And now for the events themselves. First, a synchronous request with send:

> await a.send('some event', {foo:11})

hey see Request { method: 'some event', data: { foo: 11 } }

Now for an asynchronous message with emit:

> await a.emit('some event', {foo:11})

hey see EventMessage { event: 'some event', data: { foo: 11 } }

Different! But handled in the same way.

Interceptor pattern and Request vs EventMessage handling

Well. Almost the same. Directly following this demonstration, at around 47:25, Daniel highlights a subtle but key difference, in the context of the on handlers, which -- for Request objects -- follow the interceptor stack pattern:

"When processing requests, .on(request) handlers are executed in sequence on a first-come-first-serve basis: Starting with the first registered one, each in the chain can decide to call subsequent handlers via next() or not, hence breaking the chain."

The existing service b (in the current REPL session) currently has a single handler, specifically for the before phase:

> .inspect b

b: Service {

_handlers: {

_initial: [],

before: [ EventHandler { before: '*', handler: [Function (anonymous)] } ],

on: [],

after: [],

_error: []

},

name: 'Service',

options: {}

}

Remember that the handler itself, represented in this .inspect output with [Function (anonymous)], is defined to be invoked on any event (*) and looks like this: eve => console.log(eve.event, eve.data).

At this point a second handler was added, but for the on phase, like this (I've taken the liberty to add an extra value "(on)" in the console.log call here so we can understand things a bit better when we see the output):

> b.on('xxxxx', eve => console.log(eve.event, eve.data, "(on)"))

Then an event was sent to b, with send (again, to make things a bit clearer -- i.e. avoid any undefined values being shown -- I am including a payload of {bar:11} here):

> await b.send('xxxxx',{bar:11})

xxxxx { bar: 11 }

xxxxx { bar: 11 } (on)

The first log record is from the handler registered for the before phase (i.e. the first handler we created for b), and the second is the one we just registered for the on phase.

So far so good.

But.

After adding a second on handler in a similar way, so that b has one before phase handler and two on phase handlers, like this:

> b.on('xxxxx', eve => console.log("gotcha"))

Service {

_handlers: {

_initial: [],

before: [ EventHandler { before: '*', handler: [Function (anonymous)] } ],

on: [

EventHandler { on: 'xxxxx', handler: [Function (anonymous)] },

EventHandler { on: 'xxxxx', handler: [Function (anonymous)] }

],

after: [],

_error: []

},

name: 'Service',

options: {}

}

... we do not see that handler's output when transmitting another event (a request) to b with send:

> await b.send('xxxxx',{car:11})

xxxxx { car: 11 }

xxxxx { car: 11 } (on)

However.

We do see that handler's output when transmitting an event (a message) to b with emit:

> await b.emit('xxxxx',{cdr:11})

xxxxx { cdr: 11 }

xxxxx { cdr: 11 } (on)

gotcha

What's going on, as Daniel explains, is that for synchronous requests, the interceptor pattern is honoured. In other words, for handlers in the on phase, if the first handler breaks the chain by not calling next(), any subsequent handlers registered for that on phase are not called - the processing is deemed to be complete. And there was no next() call in the on phase handler we just defined:

> b.on('xxxxx', eve => console.log(eve.event, eve.data, "(on)"))

Note that this rule, this interceptor stack processing, is for requests. Not messages. So when processing an event message, initiated with emit (rather than send), every handler registered for the on phase gets called. And this is why we see "gotcha", which is what the second handler registered for the on phase outputs.

This was a conscious decision by the CAP developers, in that it makes more sense when handling asynchronous messages to have all handlers have a chance to fire. Especially because the asynchronous context also implies there's nothing to return, nothing to send back, anyway (unlike when handling an incoming request, for which a response is expected, synchronously).

Let's prove this to ourselves now, by creating a third service c, and defining the same handlers -- one for the before phase and two for the on phase -- except this time we'll ensure that the chain is not broken by the first on phase handler, by receiving and using the next function reference passed to it (we'll practise using the REPL's multiline editor facility):

> .editor

// Entering editor mode (Ctrl+D to finish, Ctrl+C to cancel)

(c = new cds.Service)

.before('*', eve => console.log(eve.event, eve.data))

.on('xxxxx', (eve, next) => { console.log(eve.event, eve.data, "(on)"); next() })

.on('xxxxx', eve => console.log("gotcha"))

Service {

_handlers: {

_initial: [],

before: [ EventHandler { before: '*', handler: [Function (anonymous)] } ],

on: [

EventHandler { on: 'xxxxx', handler: [Function (anonymous)] },

EventHandler { on: 'xxxxx', handler: [Function (anonymous)] }

],

after: [],

_error: []

},

name: 'Service',

options: {}

}

Now for the moment of truth⁹:

> await c.send('xxxxx',{mac:11})

xxxxx { mac: 11 }

xxxxx { mac: 11 } (on)

gotcha

Because the first on phase handler didn't break the chain, the second one (that outputs "gotcha") got a chance to run too.

Nice!

Remember also that while on phase handlers are executed in sequence, all before phase handlers are executed in parallel, as are all after phase handlers.
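The dispatch rules explored in this section can be modelled in a few lines of plain JavaScript. This is a sketch under stated assumptions, not how cds.Service is implemented: ServiceSketch, RequestSketch and EventMessageSketch are hypothetical names, and only the before and on phases are covered.

```javascript
class EventMessageSketch {
  constructor(event, data) { this.event = event; this.data = data }
}
class RequestSketch extends EventMessageSketch {}

class ServiceSketch {
  constructor() { this.handlers = { before: [], on: [] } }
  before(event, handler) { this.handlers.before.push({ event, handler }); return this }
  on(event, handler) { this.handlers.on.push({ event, handler }); return this }

  async dispatch(eve) {
    const matching = phase => this.handlers[phase]
      .filter(h => h.event === '*' || h.event === eve.event)
      .map(h => h.handler)
    // before phase: every matching handler runs, in parallel
    await Promise.all(matching('before').map(h => h(eve)))
    const on = matching('on')
    if (eve instanceof RequestSketch) {
      // request: interceptor stack, the chain continues only via next()
      const run = i => (i < on.length ? on[i](eve, () => run(i + 1)) : undefined)
      return run(0)
    }
    // event message: every matching on handler gets its turn
    for (const h of on) await h(eve)
  }

  send(event, data) { return this.dispatch(new RequestSketch(event, data)) }
  emit(event, data) { return this.dispatch(new EventMessageSketch(event, data)) }
}

const log = []
const svc = new ServiceSketch()
  .before('*', eve => log.push(`before ${eve.event}`))
  .on('xxxxx', eve => log.push('first on')) // never calls next()
  .on('xxxxx', eve => log.push('gotcha'))

const demo = svc.send('xxxxx', { car: 11 })  // logs: before, first on
  .then(() => svc.emit('xxxxx', { cdr: 11 })) // logs: before, first on, gotcha
  .then(() => log)
```

As in the REPL session, "gotcha" only shows up for the emitted message, because the first on handler breaks the chain for the request.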

cds.Event and cds.Request

Finishing off this exploration, at around 49:56, Daniel talks a bit more about the difference between a (synchronous) request and an (asynchronous) message. In passing, he reveals how simple it also is to instantiate such things in the REPL.

First, a request:

> req = new cds.Request({event:'foo',data:{foo:11}})

Request { event: 'foo', data: { foo: 11 } }

Now, a message (event):

> msg = new cds.Event({event:'foo',data:{foo:11}})

EventMessage { event: 'foo', data: { foo: 11 } }

Observe how similar the creation and content of each of these objects is.

In fact, the illustration of how similar they are, or rather how related they are, can be found in the cds.Events section of Capire. Specifically:

- the cds.Event section explains that the cds.Event class "serves as the base class for cds.Request"

- the cds.Request section shows that the cds.Request class extends the cds.Event class, notably with methods that include sending back a response, such as req.reply()
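That relationship is ordinary class inheritance, which can be pictured with a small stand-alone sketch. MiniEvent and MiniRequest are hypothetical names, and the reply method here merely records a result; it illustrates the shape of the relationship, not the behaviour of the real classes.

```javascript
// Base class: just an event name and a payload
class MiniEvent {
  constructor({ event, data }) { this.event = event; this.data = data }
}

// A request is an event that additionally expects a response
class MiniRequest extends MiniEvent {
  reply(result) { this.result = result; return result }
}

const msg = new MiniEvent({ event: 'foo', data: { foo: 11 } })
const req = new MiniRequest({ event: 'foo', data: { foo: 11 } })
req.reply({ ok: true })

const requestIsEvent = req instanceof MiniEvent         // true
const messageCanReply = typeof msg.reply === 'function' // false
```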

Incidentally, if you're interested to learn more about how Daniel got his instance of VS Code to attach to the Node.js REPL process, see Node.js debugging in VS Code.

Remote services, proxies, and abstraction

Coming almost to the end of this part, at around 53:50, Daniel briefly explains remote services in the very limited time left.

To quote Daniel:

"A remote service is a service that we create locally but which happens to be a proxy to whatever remote thing there is"

As we're learning, CAP services are "just" agnostic event machines that can receive and forward messages. Especially when we use the '*' "any" event when registering handlers, we are reminded of Smalltalk's #doesNotUnderstand mechanism that we touched upon in part 4.

What supports this power and flexibility is another core feature of CAP's design and philosophy: abstraction. An essential example of this is CQL (and the machine-readable version CQN), which allows CAP to dispatch events, and their payloads, to various services, including database services and protocol handlers, where the payload is crystallised in CQN, a superset of all database layer languages and protocol mechanics. See also the earlier CQL > SQL section.

This seems an ideal potential starting point for the next part - as we ran out of time at this point.

Until next time - and I hope these notes are useful - let me know in the comments!

Footnotes

1. I know this seems obvious, but sometimes it's helpful to stare at the obvious for a while, in a "kata" style of memory reinforcement.

2. Which is Suppliers, Products and Categories, with relationships between them (if you want to peruse such a service, there's one available here).

3. You can paste multiple lines into the REPL, or edit multiple lines yourself, using the .editor REPL command (see the Commands and special keys section of the Node.js REPL documentation). You can also use template literals (`...`) for multiline strings.

4. See the Improved $expand section of my talk on OData V4 and SAP Cloud Application Programming Model for more on the complex $expand value.

5. I know that car is just a subsequent value derived from bar, but I like to think that it's a respectful reference to a core building block of the language that provided so much fundamental thinking for functional programming, which in turn has informed CAP. I'm talking of course about the primitive operation car in Lisp.

6. That this is a cds watch based affordance is further underlined when Daniel starts up the bookstore service in the cds REPL with cds r --run cap/samples/bookstore/ at around 28:31 and receives the error No credentials configured for "ReviewsService", because the "check ~/.cds-services.json and mock if nothing found" design time feature is only invoked with cds watch.

7. At this point in the live stream I remark on Daniel's choice of three characters for his parameter names reflecting some lovely historical Unix trivia, in that the system usernames of Unix and C gods such as Thompson, Ritchie, Kernighan, Weinberger et al. were always three characters, as we can see in the first column in this historical /etc/passwd file:

dmr:gfVwhuAMF0Trw:42:10:Dennis Ritchie:/usr/staff/dmr:

ken:ZghOT0eRm4U9s:52:10:& Thompson:/usr/staff/ken:

sif:IIVxQSvq1V9R2:53:10:Stuart Feldman:/usr/staff/sif:

scj:IL2bmGECQJgbk:60:10:Steve Johnson:/usr/staff/scj:

pjw:N33.MCNcTh5Qw:61:10:Peter J. Weinberger,2015827214:/usr/staff/pjw:/bin/csh

bwk:ymVglQZjbWYDE:62:10:Brian W. Kernighan,2015826021:/usr/staff/bwk:

uucp:P0CHBwE/mB51k:66:10:UNIX-to-UNIX Copy:/usr/spool/uucp:/usr/lib/uucp/uucico

srb:c8UdIntIZCUIA:68:10:Steve Bourne,2015825829:/usr/staff/srb:

(and yes, my favourite shell, bash, is named as a successor to the shell that Steve Bourne created, the Bourne shell (sh): "bash" stands for the play on words "Bourne (born) again shell".)

8. It still slightly disturbs me (in a fun way) that the event identifier here (some event) contains whitespace :-)

9. Yes, the choice of the property name mac here was deliberate; three characters, but a reference to a band that wrote a song which I've referenced in the explanation for this example and forms a core part of what we've learned here. Can you guess what the band is and why? :-)

Appendix - test environment with Northbreeze

In working through some of the examples Daniel illustrated, I used the container-based CAP Node.js 8.5.1 environment I described in the Appendix - setting up a test environment in the notes from part 4, with the Northbreeze service (a sort of cut-down version of the classic Northwind service).

When instantiating the container, I avoided making it immediately ephemeral (by not using the --rm option, and giving it a name with --name); I also added an option to publish port 4004 from the container, so the invocation that I used was:

docker run -it --name part5 -p 4004:4004 cap-8.5.1 bash

At the Bash prompt within the container, I then cloned the Northbreeze repository, and started a cds REPL in there:

node ➜ ~

$ git clone https://github.com/qmacro/northbreeze && cd northbreeze

Cloning into 'northbreeze'...

remote: Enumerating objects: 35, done.

remote: Counting objects: 100% (35/35), done.

remote: Compressing objects: 100% (25/25), done.

remote: Total 35 (delta 8), reused 33 (delta 6), pack-reused 0 (from 0)

Receiving objects: 100% (35/35), 20.85 KiB | 577.00 KiB/s, done.

Resolving deltas: 100% (8/8), done.

node ➜ ~/northbreeze (main)

$

I then started a cds REPL session and within that session I started up the CAP server on port 4004:

node ➜ ~/northbreeze (main)

$ cds r

Welcome to cds repl v 8.5.1

> cds.test('.','--port',4004)

<ref *1> Test { test: [Circular *1] }

> [cds] - loaded model from 2 file(s):

srv/main.cds

db/schema.cds

[cds] - connect to db > sqlite { url: ':memory:' }

> init from db/data/northwind-Suppliers.csv

> init from db/data/northwind-Products.csv

> init from db/data/northwind-Categories.csv

/> successfully deployed to in-memory database.

[cds] - using auth strategy {

kind: 'mocked',

impl: '../../../usr/local/share/npm-global/lib/node_modules/@sap/cds-dk/node_modules/@sap/cds/lib/srv/middlewares/auth/basic-auth'

}

[cds] - using new OData adapter

[cds] - serving northbreeze { path: '/northbreeze' }

[cds] - server listening on { url: 'http://localhost:4004' }

[cds] - launched at 12/18/2024, 12:05:58 PM, version: 8.5.1, in: 609.947ms

[cds] - [ terminate with ^C ]

>